4 Reasons Direct-Response Tests End up Telling You Nothing

Sean claimed the other day that 91.645% of direct response fundraising tests are a waste of time.

“Dude,” I said, “that’s fake news. The real number is 91.625%!”

Either way, though, a lot of time, money, and energy is wasted in our industry on testing that gets nobody anywhere.

Let’s take a look at why testing goes wrong and what you can do to make it go right…

The test is too small

If the quantity of your test is too small, you will almost certainly fail to get results you can have statistical confidence in: results you can be reasonably sure would happen again.

If your test consisted of two panels of 10 donors each, and one panel got 3 responses while the other got 6, you could say one panel got 100% more response than the other. But that would be meaningless, because with panels that small, a difference of 3 responses is just noise.

Statistical confidence comes from some combination of large-enough numbers in each test panel and a large-enough difference between the panels. Confidence is expressed as a percentage. Roughly speaking, 99% confidence means there's only about a 1% chance you'd see a difference that big from random noise alone if the two panels really performed the same. Testing experts generally look for 90% or 95% confidence before they consider a test valid.

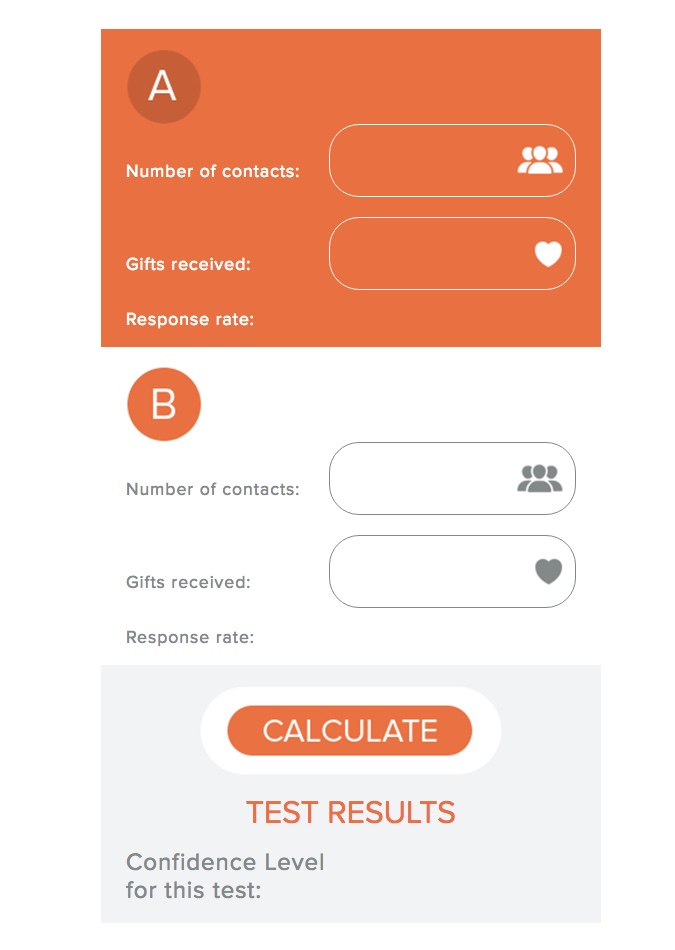

You can check the confidence level of your test with any of a handful of calculators on the web. Our favorite is the Mal Warwick Donordigital Statistical Calculator.

Use the calculator before you test: enter the quantity in each panel along with reasonable, conservative projected responses. If the calculator doesn't predict at least 90% confidence, you need to increase the size of your test panels. Or don't bother with the test.
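That pre-test check can also be run backward. The minimal Python sketch below estimates how large each panel would need to be for projected response rates to reach a target confidence, if results came in exactly as projected. It assumes a pooled two-proportion z-test and equal panel sizes; the function name and the 2.0% vs 2.4% projections are hypothetical. It also ignores statistical power (the chance that real results land close enough to your projections), so treat its answer as a floor, not a guarantee.

```python
import math
from statistics import NormalDist


def panel_size_needed(projected_rate_a: float, projected_rate_b: float,
                      target_confidence: float = 0.90) -> int:
    """Smallest equal panel size at which the projected rates, if they came in
    exactly as projected, would reach the target two-sided confidence under a
    pooled two-proportion z-test (hypothetical helper; ignores power)."""
    if projected_rate_a == projected_rate_b:
        raise ValueError("identical projections can never reach confidence")
    # z-score needed for the target two-sided confidence (about 1.645 for 90%).
    z_target = NormalDist().inv_cdf(1 - (1 - target_confidence) / 2)
    pooled = (projected_rate_a + projected_rate_b) / 2
    diff = abs(projected_rate_a - projected_rate_b)
    # Solve z = diff / sqrt(pooled * (1 - pooled) * 2 / n) for n.
    n = 2 * pooled * (1 - pooled) * (z_target / diff) ** 2
    return math.ceil(n)


n90 = panel_size_needed(0.020, 0.024)        # roughly 7,300 per panel
n95 = panel_size_needed(0.020, 0.024, 0.95)  # roughly 10,300 per panel
print(f"Panel size for 90% confidence: {n90:,}")
print(f"Panel size for 95% confidence: {n95:,}")
```

If your list can't supply panels that large for the difference you realistically expect, that's the signal to test something bigger or skip the test.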

After you’ve run the test, use the calculator again. It’s possible to fail to reach confidence even if your panels are huge. That means the results were too close to call.

The test is too insignificant

You are unlikely to learn anything if your test is something like a thick red line against a thin red line. Or changing the ninth word of the seventh paragraph from “big” to “large.” Things like that don’t move the needle much.

Direct-response testing is good at uncovering larger truths. Things like:

- What’s on the outer envelope

- The subject line

- The physical specs of a direct mail piece

- The offer

- Elements of the offer, such as ask amounts, number of asks, or the way the offer is stated.

Outside of those areas, you’re often in danger of learning nothing if you test.

Related blog: The Weird Power of Long Fundraising Letters

Uncover the techniques that will dramatically increase donations to your cause! It’s all in Jeff Brooks’ Masterclass: Irresistible Communications for Great Nonprofits.

The test is poorly defined

I once encountered a direct mail test that was supposed to test “donor centric” copy against “traditional” copy.

“Traditional” had done better, by a wide margin. I was surprised, so I took a close look back at the test. It turned out there was a distinct lack of clarity about what “donor centric” and “traditional” meant. In this case, the “donor centric” copy was dry, factual, and unemotional. “Traditional” had a strong story and very emotional copy.

What led someone to think donor centric meant unemotional is a mystery to me. I would have labelled them exactly opposite.

Related blog: So You Think You’re Donor Centric?

The problem in that test was that the terms weren’t clearly (or intelligently) defined.

Direct-response testing needs to follow the scientific method: Write down a clearly stated hypothesis that everyone has agreed on. State exactly what the test is seeking to learn. After the test runs, hold a thorough post-mortem to make sure everyone is clear about the results.

It’s testing something everyone already knows

Most testing is of things professionals already know.

- Long messages outperform short ones.

- Need-based messages do better than past-success-based messages.

- Sad photos perform better than happy ones.

- Simple, old-fashioned design is stronger than slick, modern looks.

Things like these get tested again and again and again because people don’t believe the experts. That’s not a good use of money. If you have access to expert experience (and if you know how to read, you have plenty of access), spend your testing budget learning something you don’t already know.

A similar form of wasted testing is testing things we all know rarely make a difference: who signs the letter, outbound postage treatment, minor color or font differences, and small changes in copy.

I know we are often forced to test these things. I’ve tested them all many times. So here’s a plea to the sceptical bosses who demand these tests: Folks, listen to your experts! You can save a lot of time and money!

Spend Your Testing Wisely

Every test is a non-renewable resource. Once it’s used, you never get that opportunity again. (Yes, there’s next year, but this year is gone forever!)

Spend this precious resource learning things you need to know!

P.S. Want to learn all the techniques that have enabled Jeff Brooks to raise millions of dollars for good causes? Check out his Masterclass: Irresistible Communications for Great Nonprofits. It’s available to all members of The Fundraisingology Lab.

P.P.S. Please share your experience with direct-response testing by leaving a reply below. We’d love to learn from you.

5 Comments

I think you’re making a really good point. You’ve told us what not to test. So my question is what makes a good test? Do you have a few examples?

As noted above, these are usually good categories for testing:

– What’s on the outer envelope

– The subject line

– The physical specs of a direct mail piece

– The offer

– Elements of the offer, such as ask amounts, number of asks, or the way the offer is stated.

The most impactful test is the outer envelope. The area where you can learn the most important, far-reaching stuff is the offer and its elements. Beyond that, it’s about testing the things you need to learn!

Great article! In relation to your point about the test size being too small, what do you suggest for organisations that have a small direct mail base and so don’t have the volume to increase test sizes?

In my opinion, if your numbers are too small to give meaningful test results, you should not test. Instead, pay attention to what others test. Maybe become friends with someone at a larger organization and see if you can get them to test things you need to know. Good luck!

Yeah, we did some tests varying the ‘premium’ feel: nicer envelope, fewer lifts, etc. Groups ranged from 500 to 1,800. But we only ran the statistical significance test once the results came back, and found that none of the differences were statistically significant. I don’t think that’s the same as confidence levels? Still, the results showed some differences that seemed worth continuing, like spending more on your top donors. But it’s not like we needed the test to tell us that – you guys tell us that all the time!